I recently visited a facility that displayed framed “wall art” of funny quotes from famous people. I found the quotes amusing, so I took pictures of all the wall hangings. The problem is, I don’t want to spend the time typing up all those quotes by hand (of course, I’ve probably spent much more time programming an alternative). Anyway, OCR to the rescue!

Windows seems to have a variety of OCR options, but they are all largely GUI-driven. I’d rather have a command-line solution. Enter Tesseract-OCR.

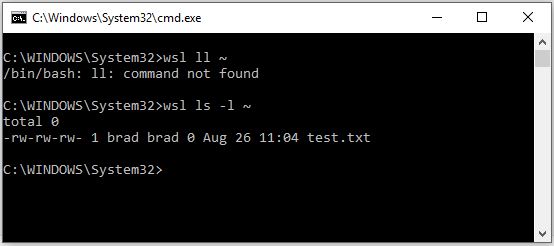

Tesseract is a command-line tool for extracting text from image files. Like most cool tools of its ilk, it works best in Linux. Am I sunk, then, since my main environment is Windows? Nope. I can install Tesseract in the Windows Subsystem for Linux (WSL) and call it from Windows. Here’s how I solved this problem:

Step 1: Use wsl.exe to run tesseract

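I actually ran all my work from a Jupyter Notebook using its shell command feature: a line starting with `!` is handed to the shell, and Python variables in curly braces, such as `{image_file}`, are replaced with their values first.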

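```python
image_file = 'IMG_20180801_150228139.jpg'  # the photo, in the notebook's working directory
# args: input image, then the output base name (tesseract appends ".txt")
! wsl tesseract {image_file} {image_file}
```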
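The same call can also be made from a plain Python script with `subprocess`. This is a sketch under stated assumptions, not the notebook cell I ran: the helper names `to_wsl_path`, `tesseract_command` and `run_tesseract` are hypothetical, the path conversion assumes WSL's default automount setup (drive `C:` appears as `/mnt/c`), and file names are assumed to contain no spaces. A relative name such as IMG_20180801_150228139.jpg passes through unchanged, because `wsl` starts in the current Windows directory.

```python
import subprocess
from pathlib import PureWindowsPath


def to_wsl_path(win_path: str) -> str:
    """Map an absolute Windows path like C:\\photos\\a.jpg to /mnt/c/photos/a.jpg.

    Assumes WSL's default automount root (/mnt). Relative paths only have
    their backslashes turned into forward slashes.
    """
    p = PureWindowsPath(win_path)
    # An absolute drive-letter path has a two-character drive like "C:".
    # UNC shares such as \\server\share are not mapped to a WSL mount.
    if p.is_absolute() and len(p.drive) == 2 and p.drive[1] == ":":
        rest = "/".join(p.parts[1:])  # parts[0] is the anchor, e.g. "C:\\"
        return f"/mnt/{p.drive[0].lower()}/{rest}"
    return p.as_posix()


def tesseract_command(image_file: str) -> list[str]:
    """Build ["wsl", "tesseract", IMAGE, OUTPUTBASE], mirroring the notebook cell.

    The image path doubles as the output base, so tesseract writes IMAGE.txt.
    """
    wsl_image = to_wsl_path(image_file)
    return ["wsl", "tesseract", wsl_image, wsl_image]


def run_tesseract(image_file: str) -> str:
    """Run tesseract inside WSL and return the Windows-side path of its text file.

    check=True raises subprocess.CalledProcessError if the command fails.
    """
    subprocess.run(tesseract_command(image_file), check=True)
    return image_file + ".txt"
```

Calling `run_tesseract("IMG_20180801_150228139.jpg")` from the folder holding the photo should leave IMG_20180801_150228139.jpg.txt beside it. WSL also ships a `wslpath` utility that converts paths from inside Linux; the pure-Python conversion here just avoids starting a second process.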

Step 2: Open the results

Tesseract automatically appends “.txt” to the output name you supply. Since I supplied my image file path as that name, it created a new file, IMG_20180801_150228139.jpg.txt, containing the text it parsed. I can just run “cat” to see the results:

```python
out_file = image_file + '.txt'  # the file tesseract created next to the image
! wsl cat {out_file}
```
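Since the output file sits in the same Windows folder as the photo, Python can also read it directly, without a round trip through WSL. A minimal sketch, assuming the `image_file + ".txt"` naming described above and a UTF-8 output file; `read_ocr_text` and its `collapse_whitespace` flag are hypothetical names used only for illustration.

```python
from pathlib import Path


def read_ocr_text(image_file: str, collapse_whitespace: bool = False) -> str:
    """Return the text tesseract wrote to IMAGE.txt.

    With collapse_whitespace=True, runs of spaces and newlines are joined
    into single spaces, turning line-wrapped OCR output into one paragraph.
    """
    out_file = Path(image_file + ".txt")  # tesseract appended ".txt" to the output base
    text = out_file.read_text(encoding="utf-8")  # UTF-8 is an assumption
    if collapse_whitespace:
        return " ".join(text.split())
    return text
```

`read_ocr_text(image_file, collapse_whitespace=True)` gives the text as a single paragraph, which is handy for pasting quotes elsewhere; leaving the flag off keeps Tesseract's original line breaks.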

And here are my results:

Everyone needs to believe in something. I believe I'll have another drink. W.C. Fields The only reason people get lost in thought is because it's unfamiliar territory. Unknown I want a man who's kind and understanding. Is that too much to ask of a millionaire? Zsa Zsa Gabor By the time a man is wise enough To watch his step, he's too old to go anywhere. Billy Crystal There are two Types of people in +his world, good and bad. The good sleep better, but the bad seem To enjoy the waking hours much more. Woody Allen r never forget o face, but in your case I'll be glad to make an exception. Groucho Marx The secret of staying young is to live honestly, eat slowly and lie about your age. Lucille Ball

Not too shabby!
