In a previous post, I provided a simple example of using pandas to read tables from a static HTML file you have on disk. This is certainly valid for some use cases. However, if you’re like me, you’ll have other use cases where you’ll want to read tables live from the Internet. Here are some steps for doing that.

Step 1: Select an appropriate “web scraping” package

My go-to Python package for reading files from the Internet is requests. Indeed, I started this example with requests, but quickly found it wouldn’t work with the particular page I wanted to read. Some pages on the Internet already contain their data pre-loaded in the HTML. Requests works great for such pages. Increasingly, though, web developers are using JavaScript to load data on their pages. Unfortunately, requests only downloads the HTML the server sends and never runs the page’s JavaScript, so it can’t pick up data loaded that way. So, I had to turn to a slightly more sophisticated approach. Selenium, which drives a real browser, proved to be the solution I needed.

To get Selenium to work for me, I had to perform two operations:

- pip/conda install the selenium package

- download Mozilla’s gecko driver to my hard drive

Step 2: Import the packages I need

Obviously, you’ll need to import the selenium package, but I also import the Options class and Python’s time package for reasons I’ll explain later, plus pandas for the read_html call in Step 6:

from selenium import webdriver

from selenium.webdriver.firefox.options import Options

import time

import pandas as pd

Step 3: Set up some Options

This is…optional (pun completely intended)…but something I like to do for aesthetic reasons. By default, when you run selenium, a new instance of your browser launches and carries out all the commands you programmatically issue to it. This can be very helpful when debugging your code, but it can also get annoying after a while, so I suppress the browser window with the Options class:

options = Options()

options.headless = True # stop the browser from popping up

Step 4: Retrieve your page

Next, instantiate a selenium driver and retrieve the page with the data you want to process. Note that I pass the file path of the gecko driver I downloaded to selenium’s driver:

driver = webdriver.Firefox(options=options, executable_path=r"C:\geckodriver-v0.24.0-win64\geckodriver.exe") # raw string so the backslashes are taken literally

driver.get("https://www.federalreserve.gov/monetarypolicy/bst_recenttrends_accessible.htm")
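A hard-coded driver path is easy to get wrong, and a wrong path makes the driver fail to start. Here is a minimal sketch, using only the standard library, that checks the path before you hand it to selenium and falls back to searching your PATH. The helper name resolve_driver_path and its parameters are my own invention for illustration, not part of selenium:

```python
import shutil
from pathlib import Path


def resolve_driver_path(explicit_path=None, name="geckodriver"):
    """Return a usable driver path as a string, or raise FileNotFoundError.

    Hypothetical helper: `explicit_path` is a path you downloaded the driver
    to; when it is omitted, the directories on PATH are searched for `name`.
    """
    if explicit_path is not None:
        candidate = Path(explicit_path)
        if candidate.is_file():
            return str(candidate)
        raise FileNotFoundError(f"no driver executable at {candidate}")
    found = shutil.which(name)  # returns None when nothing on PATH matches
    if found is None:
        raise FileNotFoundError(f"{name} was not found on PATH")
    return found


# Possible usage with the path from this step:
# path = resolve_driver_path(r"C:\geckodriver-v0.24.0-win64\geckodriver.exe")
```

The returned string could then be passed as the driver path in the call above.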

Step 5: Take a nap

The website you’re scraping might take a few seconds to load the data you want, so you might need to slow down your code a little while the page loads. Selenium includes a variety of techniques to wait for the page to load. For me, I’ll just go the easy route and make my program sleep for five seconds:

time.sleep(5) # wait 5 seconds for the page to load the data
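A fixed sleep always costs the full five seconds and can still be too short on a slow connection. As an alternative, here is a small sketch of the polling idea: keep checking a condition until it is met or a timeout passes. The wait_until function is a hypothetical helper of mine, written with the standard library only. Selenium ships its own version of this idea as WebDriverWait in selenium.webdriver.support.ui.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Possible usage with the driver from Step 4. find_elements returns an empty
# list (which is falsy) until at least one table has been rendered:
# tables = wait_until(lambda: driver.find_elements("tag name", "table"))
```

The string "tag name" is the value behind selenium’s By.TAG_NAME constant, so the commented usage line should work without an extra import.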

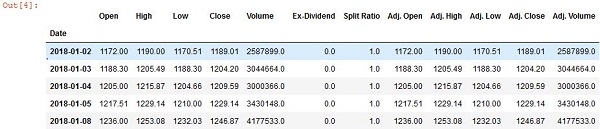

Step 6: Pipe your table data into a dataframe

Now we get to the good part: having pandas create a dataframe from the data on the web page. As I explained in Part 1, the data you want must be in a table node on the page you’re scraping. Some pages load data in div tags and the like and use CSS to make it look like a table, so view the source of the web page and verify that the data really are contained in a table node.
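If you would rather check this from code than by eye, here is a rough sketch that counts the table tags in a chunk of HTML using Python’s built-in html.parser module. The class and function names, and the two sample snippets, are made up for illustration:

```python
from html.parser import HTMLParser


class TableCounter(HTMLParser):
    """Count opening <table> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.table_count = 0

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names before calling this method
        if tag == "table":
            self.table_count += 1


def count_tables(html):
    """Return how many <table> start tags appear in the given HTML string."""
    counter = TableCounter()
    counter.feed(html)
    counter.close()
    return counter.table_count


# Made-up snippets: real table markup versus divs styled to look like a table
real_table = "<table><tr><td>1</td></tr></table>"
fake_table = '<div class="table"><div class="row"><div class="cell">1</div></div></div>'
print(count_tables(real_table))  # 1
print(count_tables(fake_table))  # 0
```

With a live selenium session you could pass driver.page_source to count_tables. A result of zero suggests that read_html will have nothing to work with.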

Initially in my example, I tried to pass the page’s entire HTML to the read_html function, but the function was unable to find the tables. I suspect the tables may be too deeply nested in the HTML for pandas to find, but I don’t know for sure. So, I used selenium to find the table element I wanted and passed just that element’s HTML to read_html. There are several tables on this page that I’ll probably want to process, so I’ll eventually need a loop to grab them all. This code only grabs the first table:

df_total_assets = pd.read_html(driver.find_element_by_tag_name("table").get_attribute('outerHTML'))[0]
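For the loop I mentioned, one possible shape is sketched below. It is not code I have run against this page. The function name collect_table_html is hypothetical, and it only assumes the driver offers find_elements(by, value) and that each element offers get_attribute, as selenium’s driver and elements do:

```python
def collect_table_html(driver, by="tag name", value="table"):
    """Return the outerHTML string of every matching element on the page.

    "tag name" is the string value behind selenium's By.TAG_NAME constant,
    so no selenium import is needed in this helper.
    """
    elements = driver.find_elements(by, value)
    return [element.get_attribute("outerHTML") for element in elements]


# Possible usage, assuming `driver` and `pd` from the earlier steps:
# from io import StringIO
# dataframes = [pd.read_html(StringIO(html))[0]
#               for html in collect_table_html(driver)]
```

Wrapping each fragment in StringIO is a precaution: newer pandas releases warn when read_html is handed a literal HTML string rather than a file-like object.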

Step 7: Keep things neat and tidy

Good coders clean up their resources when they’re done, so make sure you close your selenium driver once you’ve populated your dataframe:

driver.quit() # close the browser and shut down the gecko driver
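If something raises an exception between opening the driver and closing it, the browser process can be left running in the background. Here is a minimal sketch of one way to guard against that with a context manager. The name managed_driver and its make_driver parameter are hypothetical:

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(make_driver):
    """Yield a driver built by make_driver() and always quit it afterwards."""
    driver = make_driver()
    try:
        yield driver
    finally:
        # Runs on a normal exit and also when the body raises an exception
        driver.quit()


# Possible usage, assuming `webdriver` and `options` from the earlier steps:
# with managed_driver(lambda: webdriver.Firefox(options=options)) as driver:
#     driver.get("https://www.federalreserve.gov/monetarypolicy/bst_recenttrends_accessible.htm")
```

The factory is passed in as a function so that the driver is only created once the with block is entered.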

Again, the data you’ve scraped into the dataframe may not be in quite the shape you want it to be, but that’s easily remedied with clever pandas coding. The point is that you’ve saved much time piping this data from its web page directly into your dataframe. To see my full example, check out my code here.
